Tesla's Fatal Crash: 6 Unanswered Questions

7/7/2016

MADISON, Wis.—Two months after a Tesla Model S driving in Autopilot mode crashed and killed its driver, the fatal accident still has many automotive industry experts wondering what exactly happened and why the self-driving system failed.

The accident was a surprisingly well-kept secret until last week. The delay raises two questions: when did Tesla know, and why did the company, together with the federal agency, wait nearly two months to give the tragedy its due publicity?

Although Tesla learned about the May 7 crash in Florida "shortly" afterward, it did not disclose it to the government until May 16. It was not until June 30 that the National Highway Traffic Safety Administration (NHTSA) announced its probe into the fatality.

Separately, it’s been revealed that there was another Tesla crash on July 1, involving a Model X that rolled over on the Pennsylvania Turnpike. NHTSA just announced on Wednesday (July 6) that it’s investigating the Pennsylvania accident to determine if automated functions were involved.

More questions than answers

Without Tesla’s full disclosure and results from NHTSA investigations, nobody can say for sure what caused the fatal crash on May 7.

Several automotive experts reached by EE Times are coming up with more questions than answers. Among their questions:

- Which part of Tesla’s Autopilot system failed to recognize the imminent danger of a white trailer truck: the CMOS image sensor, the radar or the vision processor?

- How is Tesla’s Autopilot system designed to interpret sensory data?

- Which hardware was responsible for sensor fusion?

- Who wrote the sensor fusion algorithms that determine which data overrides which, and what happens when different sensors deliver contradictory information?

- More specifically, how did Tesla integrate Mobileye’s EyeQ3 vision processor into its Automatic Emergency Braking (AEB) system?

- Perception algorithms are getting pretty good. But how far has the automotive industry come with algorithms for motion planning?

- There are many corner cases (like the Tesla case) that are almost impossible to test. Considering the infinite number of potential scenarios that could lead to a crash, how does the car industry plan to meet the challenge of modeling, simulation, test and validation?

It’s premature to point a finger at any specific technology failure as the culprit. But it’s time for the automotive industry (technology suppliers, tier ones and car OEMs) to start discussing the limitations of driving on autopilot.

Amnon Shashua, Mobileye's co-founder, CTO and chairman, made the same point during the BMW/Mobileye/Intel press conference last Friday: “Companies need to be very transparent about limitations of the system. It’s not enough to tell the drivers to be alert, it needs to tell them why they need to be alert.”

In pursuit of such transparency, the following sections attempt to dissect which technologies were at play inside the Model S Autopilot, and what limitations each of them exhibited in the crash.

First, the Tesla Model S is equipped with sensing hardware that includes a front radar, a front monocular optical camera, Mobileye’s EyeQ3 vision SoC, and a 360-degree set of ultrasonic sensors. The vehicle also has loads of computing power. It uses Nvidia modules: one based on the Tegra 3 processor for media control in the head unit and another based on the Tegra 2 for instrument control.

1. What did the front-camera actually see just before the crash?

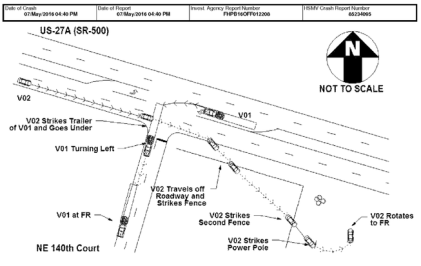

This is the first question that came to everyone’s mind. When a tractor-trailer made a left turn in front of the Tesla at an intersection on a non-controlled access highway, what did Tesla’s front-camera exactly see?

Direct sunlight hitting the camera could have kept the CMOS image sensor from seeing the truck clearly, said one industry analyst. “Of course, it all depends on the CMOS image sensor’s sensitivity and contrast.”

Phil Magney, founder and principal advisor of Vision Systems Intelligence, LLC, told us, “It’s odd that the camera could not identify the truck.” The effect of the sun on the front camera at 4:30 p.m. on May 7 in Florida, when the Tesla crashed, is unknown. But Magney added, “My guess is that the vision sensor [EyeQ3] simply didn’t know how to classify” whatever the camera saw.

“The cameras are programmed to see certain things (such as lanes) but ignore others that they cannot identify.”

It appears that the camera ignored what it couldn’t classify.
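To make Magney's point concrete, here is a minimal Python sketch, not Mobileye's or Tesla's code, of a perception filter that passes along only objects it can classify confidently. The class labels, the 0.6 confidence threshold and the `Detection` type are all hypothetical; the point is simply that anything outside the known classes never reaches the braking logic.

```python
from dataclasses import dataclass

# Hypothetical label set; the real EyeQ3 object classes are not public.
KNOWN_CLASSES = {"car_rear", "truck_rear", "pedestrian", "lane_marking"}


@dataclass
class Detection:
    label: str          # classifier's best guess for the object
    confidence: float   # classifier score between 0.0 and 1.0


def filter_detections(detections, min_confidence=0.6):
    """Keep only detections with a known label and enough confidence.

    Everything else is silently dropped, so an object the classifier
    cannot name (for example, the broad side of a trailer) is never
    reported downstream.
    """
    return [
        d for d in detections
        if d.label in KNOWN_CLASSES and d.confidence >= min_confidence
    ]


frame = [
    Detection("lane_marking", 0.95),
    Detection("truck_side", 0.90),   # not in KNOWN_CLASSES: dropped
    Detection("car_rear", 0.40),     # below threshold: dropped
]
print(filter_detections(frame))  # prints [Detection(label='lane_marking', confidence=0.95)]
```

A more conservative design would treat an unclassified object in the vehicle's path as a reason to slow down rather than as noise to be discarded.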

2. Why didn’t radar see the white truck?

Vision Systems Intelligence’s Magney made it clear, “The radar did recognize the truck as a threat. Radar generally does not know what the object is but radar does have the ability to distinguish certain profiles as a threat or not, and filter other objects out.”

If so, what was the radar thinking?

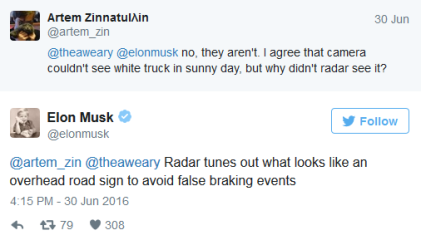

Tesla’s blog post, followed by Elon Musk’s tweet, gives us a few clues as to what Tesla believes the radar saw. Tesla’s understanding is that the vision system was blinded (the CMOS image sensor was seeing “the white side of the tractor trailer against a brightly lit sky”). Although the radar shouldn’t have had any problems detecting the trailer, Musk tweeted, “Radar tunes out what looks like an overhead road sign to avoid false braking events.”

Mike Demler, a senior analyst at The Linley Group, disagrees.

He told EE Times that if Tesla’s theory is correct, “Tesla has some serious problems in their sensor system and software.”

Demler noted, “According to the Department of Transportation, overhead signs should be a minimum of 17 feet above the pavement, and most of them are green. The Tesla Model S involved in the crash is only 56.5 inches tall, and it hit the underside of the truck.”

He added, “The radar may have seen open road under the truck, but it should have indicated the distance and height was inconsistent with a sign.”

Demler doesn’t believe radar was at fault.

He explained that radar is “most commonly used for adaptive cruise control. It prevents rear-end collisions by detecting the distance to a vehicle up ahead. For autonomous vehicles, radar can provide longer distance object detection than cameras and Lidar.”

“Radar doesn’t provide detail for object identification,” he acknowledged. But radar also provides better sensing at night and through fog and precipitation, he added.

Demler pointed to a statement Tesla issued to the media last Friday, which explained that its autopilot system “activates automatic emergency braking in response to any interruption of the ground plane in the path of the vehicle that cross-checks against a consistent radar signature.”

In that case, Demler said, “I suspect the radar missed the truck because it is focused too close to the ground and only saw the opening.”
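Demler's objection is essentially a geometry check. The Python sketch below converts the two figures he cites (the 17-foot minimum clearance for overhead signs and the Model S's 56.5-inch height) to meters and applies them to an object's estimated lower edge. It assumes the sensor suite can estimate that height at all, which is itself an assumption; the 0.5 m tolerance and the 1.2 m trailer clearance in the example are hypothetical values, not measurements from the crash.

```python
FT_TO_M = 0.3048
IN_TO_M = 0.0254

# Figures quoted by Demler.
MIN_SIGN_CLEARANCE_M = 17 * FT_TO_M   # about 5.18 m
MODEL_S_HEIGHT_M = 56.5 * IN_TO_M     # about 1.44 m


def could_be_overhead_sign(lower_edge_m, tolerance_m=0.5):
    """True if the object's lower edge is high enough to be a road sign.

    tolerance_m is an assumed allowance for height-estimation error.
    """
    return lower_edge_m >= MIN_SIGN_CLEARANCE_M - tolerance_m


def car_fits_underneath(lower_edge_m, vehicle_height_m=MODEL_S_HEIGHT_M):
    """True if the vehicle can physically pass under the object."""
    return lower_edge_m > vehicle_height_m


trailer_edge_m = 1.2  # hypothetical value for illustration only
print(could_be_overhead_sign(trailer_edge_m))  # False
print(car_fits_underneath(trailer_edge_m))     # False
```

For any object low enough to strike a 56.5-inch car, both checks argue against the "overhead sign" interpretation, which is the inconsistency Demler points to.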

3. Which hardware was responsible for sensor fusion?

Experts we talked to agree that Mobileye’s EyeQ3 is capable of handling camera/radar fusion. But it’s not clear whether the EyeQ3 chip was used in the Tesla for this purpose.

Beyond the fact that Tesla’s Autopilot uses Mobileye’s EyeQ chip, it has never been made clear which parts of Autopilot are built by Tesla and which by Mobileye.

A statement Tesla issued last Friday said:

Tesla’s autopilot system was designed in-house and uses a fusion of dozens of internally- and externally-developed component technologies to determine the proper course of action in a given scenario.

Since January 2016, Autopilot activates automatic emergency braking in response to any interruption of the ground plane in the path of the vehicle that cross-checks against a consistent radar signature. In the case of this accident, the high, white side of the box truck, combined with a radar signature that would have looked very similar to an overhead sign, caused automatic braking not to fire.

Vision Systems Intelligence’s Magney told us, “I think most of the perception for Autopilot is done by camera. But it does use radar for confirmation. It is also my understanding that the emergency braking solution in the Tesla requires a confirmation of both the radar and the camera.”

In short, two pieces of misleading sensory data, taken together, did not prompt the Autopilot system to take any action.
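Tesla's statement and Magney's description suggest braking logic in which the camera and the radar must agree. The following Python sketch illustrates that AND-style gating under stated assumptions: the `CameraObservation` and `RadarTrack` types, including the `looks_like_overhead_sign` flag, are hypothetical stand-ins, not Tesla's actual interfaces.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CameraObservation:
    ground_plane_interrupted: bool   # something appears to block the road


@dataclass
class RadarTrack:
    range_m: float
    looks_like_overhead_sign: bool   # hypothetical output of a sign filter


def aeb_should_fire(camera: CameraObservation,
                    radar: Optional[RadarTrack]) -> bool:
    """Fire automatic emergency braking only if both sensors agree.

    The radar "confirms" only when it has a track that has not been
    filtered out as an overhead sign.
    """
    radar_confirms = radar is not None and not radar.looks_like_overhead_sign
    return camera.ground_plane_interrupted and radar_confirms


# Scenario resembling Tesla's account: the camera is washed out and the
# radar return is classified as an overhead sign.
camera = CameraObservation(ground_plane_interrupted=False)
radar = RadarTrack(range_m=40.0, looks_like_overhead_sign=True)
print(aeb_should_fire(camera, radar))  # False
```

Requiring agreement suppresses false braking events, as Musk's tweet describes, but it also means a single misread sensor is enough to veto braking.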

The Linley Group’s Demler believes that the more pertinent question should be “how Tesla integrated EyeQ3 into its own Automatic Emergency Braking (AEB) system.”

Tesla’s description of how its AEB works does not align with Mobileye’s statement.

Mobileye’s chief communication officer Dan Galves told the media that “today’s collision avoidance technology, or Automatic Emergency Braking (AEB) is defined as rear-end collision avoidance, and is designed specifically for that.”

In Mobileye’s opinion, the Tesla crash “involved a laterally crossing vehicle, which current-generation AEB systems are not designed to actuate upon.” Galves said, “Mobileye systems will include Lateral Turn Across Path (LTAP) detection capabilities beginning in 2018, and the Euro NCAP safety ratings will include this beginning in 2020.”

Tesla, however, insisted that its own Autopilot system is designed to trigger AEB regardless of where (rear or front) it senses trouble. The AEB activates “in response to any interruption of the ground plane in the path of the vehicle.”

In summary, Demler said that the problem [of sensor fusion in Tesla] appears to have been in Tesla’s implementation.

4. Who writes perception software?

It is entirely possible that the crash was caused by “perception software” that wasn’t capable of deciphering what it’s sensing. Who actually writes those algorithms?

The Linley Group’s Demler said, “It depends.”

He explained that Mobileye sells after-market systems as complete packages, but they generally work with the tier ones to develop the systems for new cars.

Meanwhile, “Tesla acts as their own Tier 1, so they developed their own software.” Demler added, “There are several software companies like ADASworks that specialize in this.”

Indeed, Tesla is in a league of its own. Behaving more like a tech company than a traditional carmaker, Tesla famously skips working with tier ones. This approach is turning into a trend: the recently announced alliance of BMW, Intel and Mobileye includes no tier ones.

5. What about algorithms for motion planning?

Sensor fusion is vital to getting things right and avoiding catastrophes, as Vision Systems Intelligence’s Magney explained. But what about algorithms for motion planning, he asked.

In his opinion, “sensing for safety systems is pretty good and the sensor technologies to support it are also pretty good… However, as you move up the ladder of autonomy, the challenges grow exponentially.”

More specifically, “there are a lot of other hard problems related to motion planning.” Magney said, “For example, once you have detected a scene, what do you do next is critical – how much steering angle, how much torque, how to factor in low grip conditions, etc.”
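Magney's mention of low-grip conditions points to one of the simplest physical constraints a motion planner has to respect: available friction limits deceleration. Assuming a constant friction coefficient μ and ignoring reaction time and brake dynamics, braking distance is v²/(2μg). The Python sketch below applies that textbook formula; the μ values are rough illustrative figures for dry versus wet pavement, not data from any vehicle.

```python
G = 9.81  # gravitational acceleration, m/s^2


def braking_distance_m(speed_kmh: float, mu: float) -> float:
    """Distance to stop from speed_kmh at constant deceleration mu * g.

    Ignores driver or system reaction time, brake ramp-up and slope.
    """
    if mu <= 0:
        raise ValueError("friction coefficient must be positive")
    v = speed_kmh / 3.6  # km/h to m/s
    return v * v / (2 * mu * G)


for surface, mu in [("dry (assumed mu=0.8)", 0.8), ("wet (assumed mu=0.4)", 0.4)]:
    print(f"{surface}: {braking_distance_m(100, mu):.1f} m from 100 km/h")
```

Halving the grip doubles the stopping distance (roughly 49 m versus 98 m here), which is why a planner has to adjust how hard, and how early, it brakes or steers.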

Mobileye, which already holds the lion’s share of the vision SoC market, is positioning itself as the supplier of a super SoC that combines processing for camera, radar and Lidar. Elchanan Rushinek, Mobileye’s senior vice president of Engineering, explained in a recent interview with us what’s inside its upcoming EyeQ5, scheduled to sample in 2018.

However, it isn’t likely that such a super fusion chip will also have room for motion planning algorithms. Given where NXP’s Bluebox and Audi’s zFAS are heading, Magney envisions a platform featuring multiple processors, each partitioned with different domain responsibilities. Such domains include safety, motion controls and decision making, in addition to perception sensor fusion.

6. How to handle corner cases

What the Tesla crash exposed isn’t just the limits of individual sensor hardware or software. That’s a given. Rather, it exposed Tesla’s failure to prepare its system for an unanticipated event, or for multiple failures within its system.

For example, who at Tesla imagined that its own vehicle sensors would have problems distinguishing a white trailer truck from an overhead road sign?

Did Tesla engineers foresee the possibility that a truck situated perpendicular to the car’s path, neither approaching the car nor pulling away, would manifest the Doppler speed signature of a stopped object?

Further, how was Tesla’s Autopilot system supposed to react when multiple sensors failed to decipher what they were detecting?
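The Doppler question can be checked with a little vector arithmetic. A radar measures range rate, the component of relative velocity along its line of sight. The Python sketch below, a simplified 2-D model with hypothetical positions and speeds, compares that measurement with what a fixed object at the same spot would produce. For a truck crossing directly ahead, its own motion is perpendicular to the line of sight and contributes nothing, so it is indistinguishable from a stationary object; a car pulling away, by contrast, is not.

```python
import math


def range_rate(ego_pos, ego_vel, tgt_pos, tgt_vel):
    """Radial (Doppler) velocity of the target as seen from the ego car, m/s.

    Negative values mean the gap is closing. Positions and velocities are
    (x, y) tuples in a ground-fixed frame.
    """
    rx, ry = tgt_pos[0] - ego_pos[0], tgt_pos[1] - ego_pos[1]
    vx, vy = tgt_vel[0] - ego_vel[0], tgt_vel[1] - ego_vel[1]
    return (rx * vx + ry * vy) / math.hypot(rx, ry)


def looks_stationary(ego_pos, ego_vel, tgt_pos, tgt_vel, tol=0.5):
    """True if the target's range rate matches that of a fixed object."""
    measured = range_rate(ego_pos, ego_vel, tgt_pos, tgt_vel)
    if_fixed = range_rate(ego_pos, ego_vel, tgt_pos, (0.0, 0.0))
    return abs(measured - if_fixed) < tol


ego_pos, ego_vel = (0.0, 0.0), (30.0, 0.0)   # ego car doing 30 m/s along x
ahead = (50.0, 0.0)                          # target 50 m straight ahead

crossing_truck = (0.0, 5.0)    # moving sideways across the lane
lead_car = (25.0, 0.0)         # moving the same way as the ego car

print(looks_stationary(ego_pos, ego_vel, ahead, crossing_truck))  # True
print(looks_stationary(ego_pos, ego_vel, ahead, lead_car))        # False
```

If a system tunes out returns that look stationary, as Musk's tweet suggests Tesla's does for apparent overhead signs, this is how a crossing vehicle can end up in the same bucket.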

“Sensor fusion requires a lot of steps in development: modeling, simulation, test and validation before you can deploy them,” said Magney. “There are so many corner cases (like the Tesla case). It is almost impossible to test for every possibility.”

Making Autopilot driving work 99 percent of the time might be possible. “But to improve the probability to 99.99 percent, it takes a monumental task,” Magney noted.
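The gap between 99 percent and 99.99 percent is bigger than it looks. The Python sketch below makes the arithmetic explicit under a simplifying assumption that each difficult encounter is an independent trial with the same success rate; real driving is not that tidy, and the 100-encounter figure is purely illustrative.

```python
def failure_probability(success_rate: float, encounters: int) -> float:
    """Chance of at least one failure over independent encounters."""
    return 1.0 - success_rate ** encounters


for rate in (0.99, 0.9999):
    p = failure_probability(rate, 100)
    print(f"{rate:.2%} per encounter -> {p:.1%} chance of a failure in 100")
```

Moving from 99 to 99.99 percent cuts the per-encounter failure rate 100-fold; over 100 encounters, the chance of at least one failure drops from about 63 percent to about 1 percent.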

Considering an infinite number of potential scenarios that could lead to a crash, the automotive industry needs an agreed-upon methodology for modeling, simulation, test and validation.

Duke University robotics professor Mary Cummings testified at a U.S. Senate hearing in March that the self-driving car community is “woefully deficient” in its testing programs, “at least in the dissemination of their test plans and data.”

In particular, she said she’s concerned about the lack of “principled, evidenced-based tests and evaluations.”

We know that Google is doing its own thing. Tesla is certainly pursuing its dream. Mobileye has its own, jealously guarded sensor algorithms. But when it comes to testing and validation of autonomous driving, these industry leaders need to find a way to cooperate.

What will happen next?

Beyond testing and validation, many automotive industry analysts agree that more redundancy in sensors is the key to safer systems.

Beyond image sensors and radar, they mentioned Lidar, V2X and high-definition maps as additional sensors. Magney said, “An integrated high-attribute map could have helped too, as stationary physical objects and their precise locations can be used to improve sensor confidence rather than filtering them out.”
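Magney's map point can be illustrated with a short Python sketch: instead of discarding every stationary radar return, the system looks each one up in a map of known fixed structures and ignores it only if the map says the vehicle fits underneath. The map format, the 3 m matching radius and the field names are hypothetical, and the sketch assumes the car is already precisely localized in the map frame.

```python
import math
from dataclasses import dataclass


@dataclass
class MappedStructure:
    name: str
    x_m: float
    y_m: float
    clearance_m: float    # height of the structure's lower edge


def classify_stationary_return(x_m, y_m, hd_map, vehicle_height_m,
                               match_radius_m=3.0):
    """Return 'ignore' only for returns matching a mapped, high structure."""
    for s in hd_map:
        if math.hypot(x_m - s.x_m, y_m - s.y_m) <= match_radius_m:
            if s.clearance_m > vehicle_height_m:
                return "ignore"      # known sign or bridge the car fits under
            return "obstacle"        # mapped, but too low to pass beneath
    return "obstacle"                # unmapped stationary object in the path


hd_map = [MappedStructure("overhead sign", 120.0, 0.0, 5.2)]
print(classify_stationary_return(120.5, 0.0, hd_map, 1.44))  # ignore
print(classify_stationary_return(45.0, 0.0, hd_map, 1.44))   # obstacle
```

The design choice is to treat "unknown and stationary" as an obstacle by default and let the map grant exceptions, the inverse of filtering first.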

Luca De Ambroggi, principal analyst, automotive semiconductor at IHS Technology, noted that as the industry goes forward, “redundancy in sensors is a must.” He added that redundancy will also be required for other electronic components to ensure a fallback solution in case of failure.

Further, De Ambroggi suspects car OEMs will start asking for “ASIL D” – Automotive Safety Integrity Level D – certification in technology used in autonomous driving vehicles.

ASIL D refers to the highest classification of initial hazard (injury risk) defined within ISO 26262. “ASIL D will require a much stricter control and documentation on system functional safety,” De Ambroggi said.
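For readers unfamiliar with how an ASIL is assigned: ISO 26262 rates each hazard for severity (S0 to S3), probability of exposure (E0 to E4) and controllability (C0 to C3), then looks up the level in a table. A commonly used shorthand reproduces that table by summing the three ratings, as in the Python sketch below; it is offered as a study aid, and the standard itself remains the authority. The example ratings for a braking failure are illustrative, not a formal hazard analysis.

```python
def asil(severity: int, exposure: int, controllability: int) -> str:
    """Map ISO 26262 S/E/C ratings to an ASIL using the sum shorthand.

    Any rating of 0 means QM (quality management only, no ASIL).
    Otherwise S+E+C of 10 gives D, 9 gives C, 8 gives B, 7 gives A,
    and anything lower gives QM.
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    if 0 in (severity, exposure, controllability):
        return "QM"
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(
        severity + exposure + controllability, "QM")


# Illustrative: failure to brake for an obstacle at highway speed.
# S3 (life-threatening), E4 (high exposure), C3 (hard for driver to control).
print(asil(3, 4, 3))  # D
```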

But there is one more thing to consider, said Demler.

I don’t think it’s just a matter of the number of sensors. I think the problem is that Elon Musk and Tesla have been too cavalier about using human drivers as autopilot beta testers, which is what their own documents call them. They say drivers are “required” to keep their hands on the wheel, when clearly they are not. From a technical point-of-view, the system they’ve implemented is only capable of partial autonomous driving, under a very limited set of conditions, yet they call it autopilot.

Tesla Auto Club touts Tesla Model S's Autopilot. On a virtually empty highway, it looks smooth and almost invincible.

— Junko Yoshida, Chief International Correspondent, EE Times

(selected comments):

Re: In my humble opinion

junko.yoshida 7/8/2016 11:16:06 AM

Olaf, that's a good point. No, owners don't read thick manuals anymore. Everyone expects an "out of the box" experience. If you had to look it up in the manual or search for answers on wikiHow, yeah, that's definitely a turn-off.

What actually bothers me about this case is that the whole Tesla "fanboy" community really encouraged reckless driving behavior, i.e., using an ADAS system for "autonomous" hands-free driving. Drivers post their own "look ma, no hands" video clips on YouTube, enthralled by the number of clicks they get. On top of that, one gets a retweet from Elon Musk, which makes the driver feel as though he's died and gone to heaven.

You know how the circular nature of social media goes. Everyone follows what everyone else seems to be doing, and kids himself that it's OK to drive his Tesla hands-free.

Calling ADAS "Autopilot" is the biggest misnomer of this decade, in my humble opinion.

Copyright © 2016 UBM All Rights Reserved

----------------------------------------------

Re: good article

junko.yoshida 7/8/2016 11:03:28 AM

Eric, thanks for chiming in. This message coming from you, really one of the pioneers of CMOS image sensors, means a lot to us.

Yes, I am sure that Tesla and all the technology suppliers have a better handle on what went wrong. But you raise a good question here.

If you, as a driver, are blinded by the sun, you slow down, rather than telling yourself, "I can't classify what's in front of me."

The car manufacturer, and tech suppliers too, must do a much better job of spelling out the limitations of their products.

The public in this day and age tends to be too trusting of technology.

----------------------------------------------

Re: Truck safety regulations

sixscrews 7/7/2016 7:41:00 PM

Trucks do have rear crash barriers but not side barriers, although I have seen more and more trailers with side airfoils intended to reduce aerodynamic drag; reinforcing these as crash barriers would be a no-brainer. On the other hand, no matter what kind of barrier you have, a 65 mph impact is likely to be lethal or at least result in serious injury to the front-seat occupants.

I appreciate the endorsement of certification of automotive software. Carmakers and others will carry on about gov't interference, but if you had a chance to read some of the code in the Toyota TCM it would make your eyes spin; spaghetti code doesn't even begin to describe it. I wrote better code (I think) back in 1978 when we were using punch cards.

There are lots of excellent software engineers, and managers who know how to get them to produce excellent code; all it takes is for the auto industry to get smart and hire them. But they are cheap and dumb, IMHO, and more focused on marketing than product development (with some exceptions: Ford's focus on turbochargers is impressive, but I have no idea what their software looks like).

And then there is Volkswagen's diesel emission cheat software: you can do all kinds of things in code provided nobody but your supervisors looks at it.

wb/ss

----------------------------------------------

Re: Who is responsible for the accident?

sixscrews 7/7/2016 4:47:40 PM

Your comments are dead on. What was going on here?

However, if an autopilot system cannot compensate for stupid drivers of other vehicles on the same roadway, then it's worse than useless: it's a loaded weapon pointed straight at the head of the vehicle operator, whose primary responsibility is to keep aware of the situation.

So, if Tesla is marketing this as 'play your DVD and let us do the driving,' then they are worse than fools: they are setting their clients up for fatal crashes.

At this time there is no software that can anticipate all the situations that will occur on a roadway, and anyone who claims otherwise is ignorant of the state of software circa 2016.

And this brings me to one of my favorite dead horses: the NHTSA has no authority to certify vehicular control software, and this allows manufacturers to release software that is developed by a bunch of amateurs with no idea of the complexities of real-time control systems (see the Toyota throttle control issues revealed in the Oklahoma case 18 months ago).

Congress (or whatever group of clowns masquerades as Congress these days) must implement, or allow NHTSA or another agency to create, a set of regulations similar to those applying to aircraft flight control systems. A vehicle operating in a 2-D environment is just as dangerous as an aircraft operating in a 3-D environment, and any software associated with either one should be subject to the same rules.

Automakers are behaving as if the vehicles they manufacture are still running on a mechanical distributor-points-condenser-coil system with a driver controlling manifold pressure via the 'gas pedal,' as was true in 1925. They fought air bags, collapsible steering columns, safety glass and dozens of other systems from the '20s to the present. Enough is enough.

Mine operators who intentionally dodge safety rules spend time behind bars (or maybe, provided the appeals courts aren't controlled by their friends). So why shouldn't automakers be subject to the same penalties? Kill your customer, go to prison, do not pass GO, do not collect $200.

The engineering of automobiles is not a game of lowest bidder or cheapest engineer; given the cost of vehicles today, it should be the primary focus of company executives, not something to be passed off as an annoyance and cost center with less importance than marketing.

It's time for automakers to step up, take responsibility for the systems they manufacture, and live with certification of the systems that determine life or death for their customers.

wb/ss

===============================

Tesla Crashes BMW-Mobileye-Intel Event

Recent auto-pilot fatality casts pall

Junko Yoshida, Chief International Correspondent

7/1/2016 05:32 PM EDT

---------------------------------------------

(selected comment - by the article author):

Re: Need information

junko.yoshida 7/2/2016 7:18:45 AM

@Bert22306 thanks for your post.

I haven't had time to do a full story, but here's what we know now:

You asked:

1. So all I really want to know is, why did multiple redundant radar and optical sensors not see the broad side of a trailer?

The Tesla Model S doesn't come with "multiple redundant radar and optical sensors." It has one front radar, one front monocular Mobileye optical camera, and a 360-degree set of ultrasonic sensors.

In contrast, similar-class cars like Mercedes-Benz come with a lot more sensing hardware, including short-range radar, multi-mode radar and a stereo optical camera, in addition to what the Tesla Model S has.

But that's beside the point. More sensing devices obviously help, but how each carmaker is doing sensor fusion and what sort of algorithms are at work are not known to us. There are no good yardsticks available to compare different algorithms against one another.

2. Must have been a humongous radar target, no?

Yes, the truck was a huge radar target. But according to Tesla's statement, "The high, white side of the box truck, combined with a radar signature that would have looked very similar to an overhead sign, caused automatic braking not to fire."

In other words, Tesla's Autopilot system believed the truck was an overhead sign that the car could pass beneath without problems.

Go figure.

3. Why the driver didn't notice is easily explained.

There have been multiple reports that the police found a DVD player inside the Tesla driver's car. There have been suggestions (but it isn't confirmed) that the driver might have been watching a film when the crash happened. But again, we don't know this for sure.

4. Even though this was supposed to be just assisted driving, one might assume that the driver was distracted

You would think. It's important to note, however, that the driver killed in the Tesla Autopilot crash was known to be a big Tesla fan, and he posted a number of viral videos of his Model S.

Earlier this year, he posted one video clip showing his Tesla avoiding a close-call accident with Autopilot engaged. (Which obviously got attention and a tweet back from Elon Musk...)

Here's the thing. Yes, what Autopilot did in that video is cool, but it's high time for Musk to start tweeting the real limitations of his Autopilot system.

5. Saying things like "We need standards" is so peripheral!

I beg to differ, Bert.

As we try to sort out what exactly happened in the latest Tesla crash, we realize that there isn't a whole lot of information publicly available.

Everything Google, Tesla and others do today remains private, and we have no standards to compare them against. The autonomous car industry is still living in the dark age of siloed communities.

I wrote this blog because I found what Mobileye's CTO had to say at the BMW/Mobileye/Intel press conference Friday morning interesting. There were a lot of good nuggets there.

But one of the things that struck me is this: whether you like it or not, auto companies must live with regulations. And "regulators need to see the standard emerging," as he put it.

As each vendor develops its own sensor fusion, its own software stack, etc., one can only imagine the complexities multiplying in standards for testing and verifying autonomous cars.

Standards are not peripheral. They are vital.

===============================